AI deepfake tricks Democrats as laws lag behind improper use of ‘digital twins’

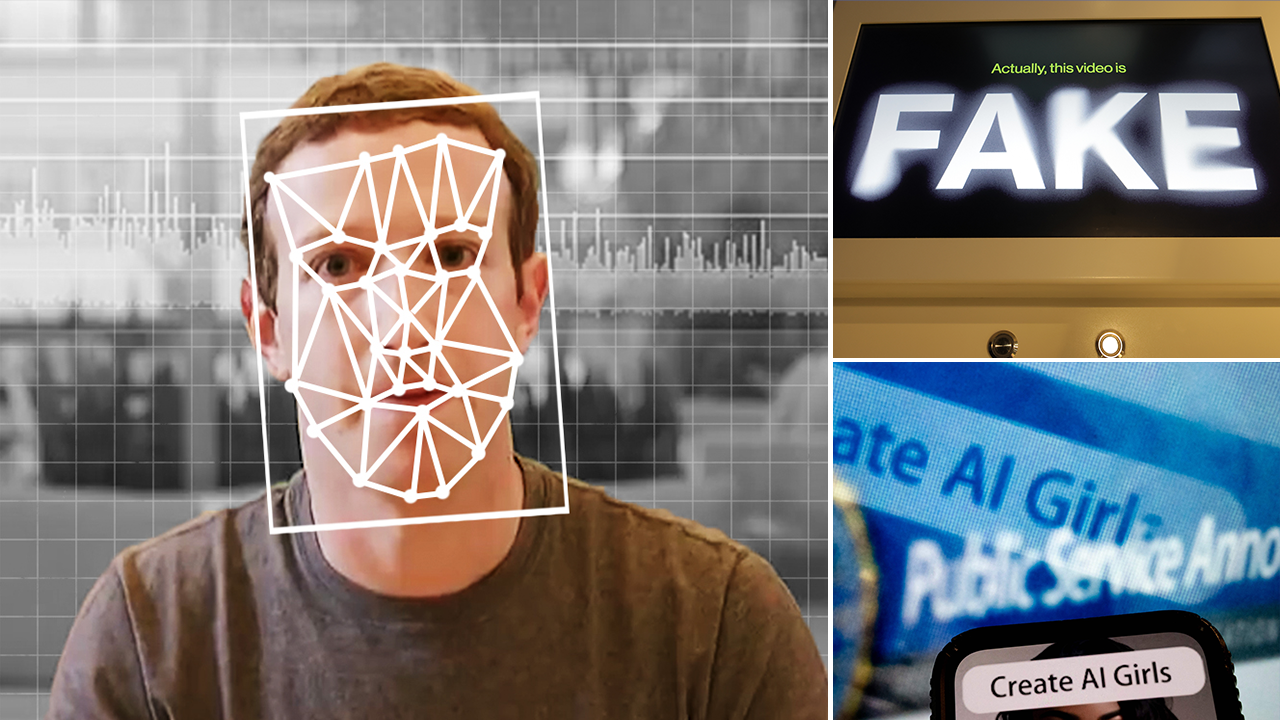

Artificial intelligence (AI) is producing hyperrealistic “digital twins” of politicians and celebrities, as well as pornographic material and more, leaving victims of deepfake technology struggling to determine their legal recourse.

Former CIA agent and cybersecurity expert Dr. Eric Cole told Fox News Digital that poor online privacy practices and people’s willingness to post their information publicly on social media leave them susceptible to AI deepfakes.

“The cat’s already out of the bag,” he said.

“They have our pictures, they know our kids, they know our family. They know where we live. And now, with AI, they’re able to take all that data about who we are, what we look like, what we do, and how we act, and basically be able to create a digital twin,” Cole continued.

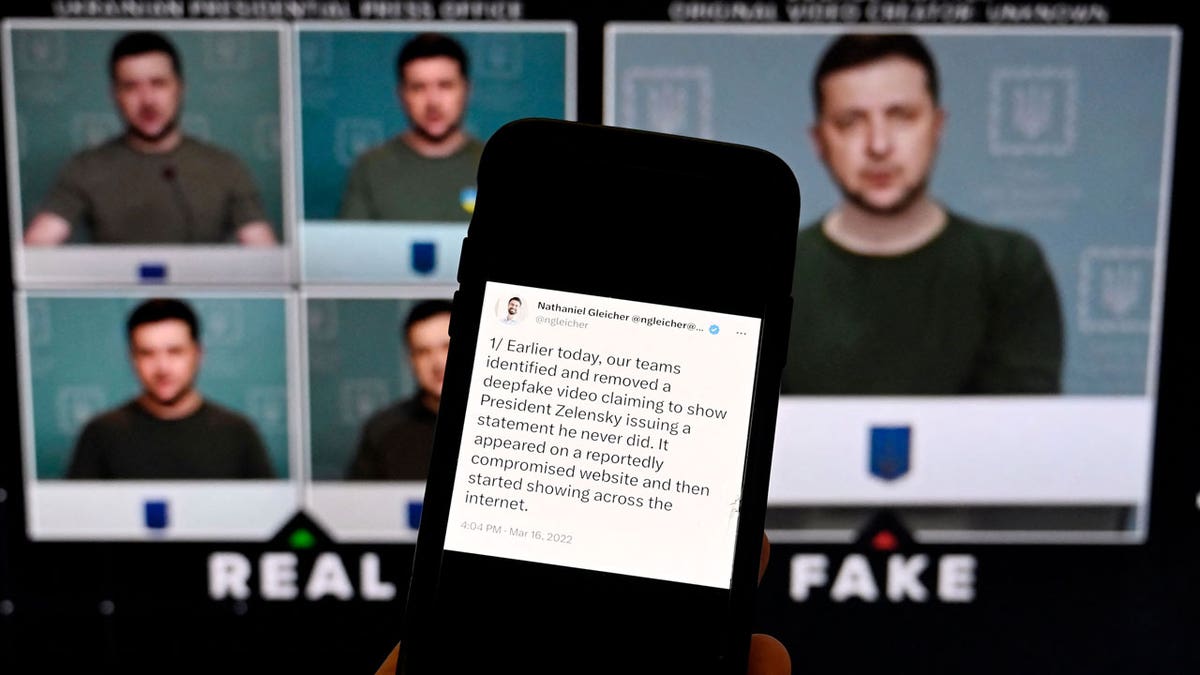

AI-generated images, known as “deepfakes,” often involve editing videos or photos of people to make them look like someone else or use their voice to make statements they never uttered in reality. (Elyse Samuels/The Washington Post/Lane Turner/The Boston Globe/STEFANI REYNOLDS/AFP via Getty Images)

That digital twin, he claimed, is so good that it is hard to tell the difference between the artificial version and the real person the deepfake is based on.

Last month, a fraudulent audio clip circulated in which Donald Trump Jr. appeared to suggest that the U.S. should have sent military equipment to Russia instead of Ukraine.

The post was widely discussed on social media and appeared to be a clip from an episode of the podcast “Triggered with Donald Trump Jr.”

Experts in digital analysis later confirmed that the recording of Trump Jr.’s voice was created using AI, noting that the technology has become more “proficient and sophisticated.”

FactPostNews, an official account of the Democratic Party, posted the audio as if it were authentic. The account later deleted the recording. Another account, Republicans against Trump, also posted the clip.

In the last several years, numerous examples of AI deepfakes have been used to mislead viewers engaging with political content. A 2022 video showed what appeared to be Ukrainian President Volodymyr Zelenskyy surrendering to Russia – but the fake clip was poorly made and only briefly spread online.

Manipulated videos of President Donald Trump and former President Joe Biden later appeared in the run-up to the 2024 U.S. Presidential Election. Based on existing videos, these clips often altered Trump and Biden’s words or behaviors.

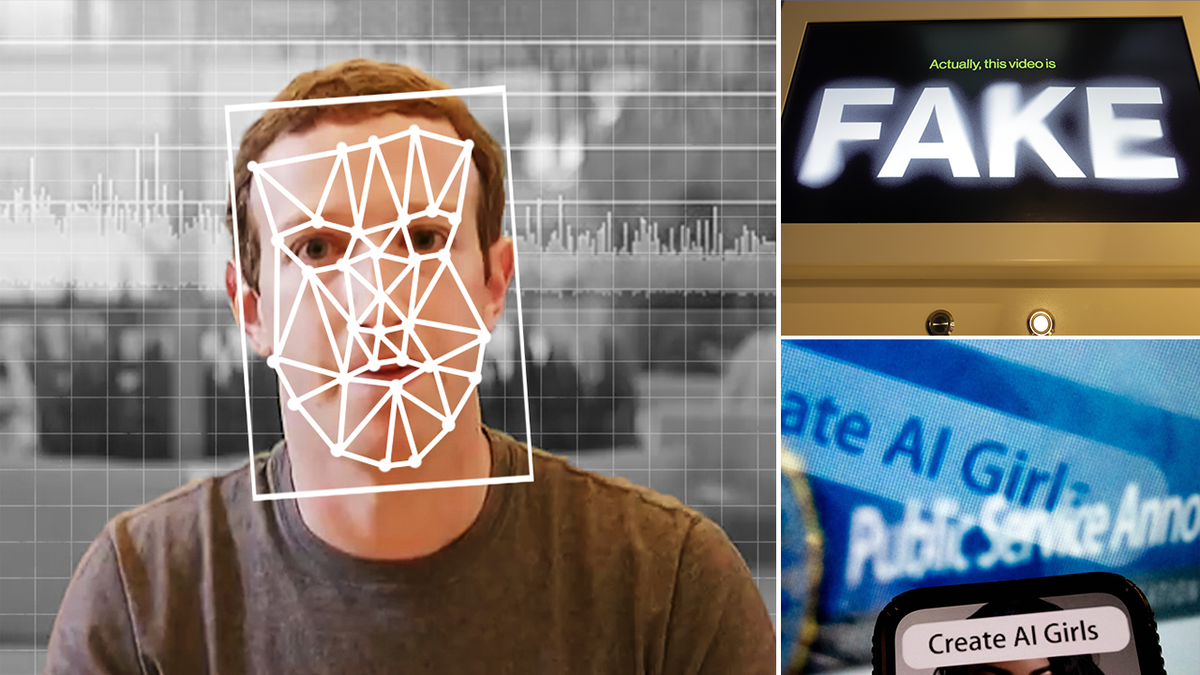

A woman in Washington, D.C., views a manipulated video on January 24, 2019, that changes what is said by President Donald Trump and former president Barack Obama, illustrating how deepfake technology has evolved. (Rob Lever/AFP via Getty Images)

AI-generated images, known as “deepfakes,” often involve editing videos or photos of people to make them look like someone else. Deepfakes hit the public’s radar in 2017 after a Reddit user posted realistic-looking pornography of celebrities to the platform. That opened the floodgates to users employing AI to make images look more convincing, and such images were shared more widely in the following years.

Cole told Fox News Digital that people are their “own worst enemy” regarding AI deepfakes, and limiting online exposure may be the best way to avoid becoming a victim.

However, in politics and media, where “visibility is key,” public figures become prime targets for nefarious AI use. A threat actor interested in replicating President Trump will have plenty of fodder to create a digital twin, drawing on data of the U.S. leader in different settings.

“The more video I can get on, how he walks, how he talks, how he behaves, I can feed that into the AI model and I can make deepfake that is as realistic as President Trump. And that’s where things get really, really scary,” Cole added.

In addition to taking personal responsibility for walling off personal data online, Cole said legislation may be another method to curtail the improper use of AI.

Sens. Ted Cruz, R-Texas, and Amy Klobuchar, D-Minn., recently introduced the Take It Down Act, which would make it a federal crime to publish, or threaten to publish, nonconsensual intimate imagery, including “digital forgeries” crafted by artificial intelligence. The bill unanimously passed the Senate earlier in 2025, with Cruz saying in early March he believes it will be passed by the House before becoming law.

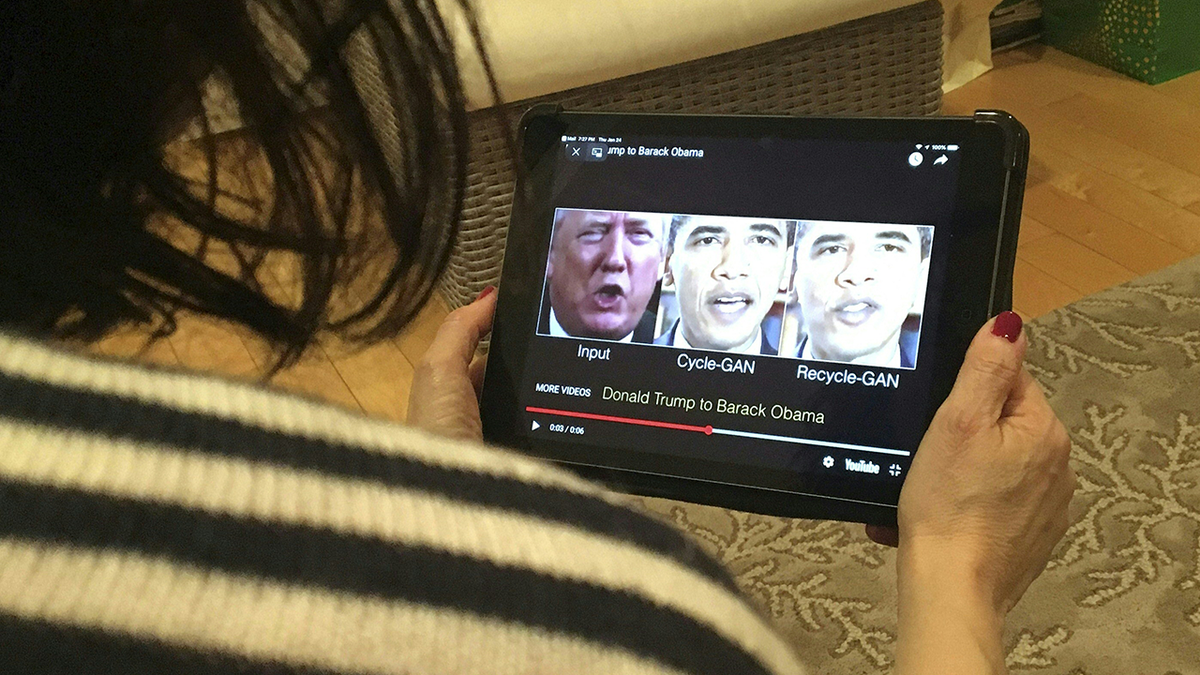

First lady Melania Trump traveled to Capitol Hill on Monday for a roundtable to rally support for the Take It Down Act. (Fox News)

The proposed legislation would require penalties of up to three years in prison for sharing nonconsensual intimate images — authentic or AI-generated — involving minors and two years in prison for those images involving adults. It also would require penalties of up to two and a half years in prison for threat offenses involving minors, and one and a half years in prison for threats involving adults.

The bill would also require social media companies such as Snapchat, TikTok, Instagram and similar platforms to put procedures in place to remove such content within 48 hours of notice from the victim.

First lady Melania Trump spoke on Capitol Hill earlier this month for the first time since returning to the White House, participating in a roundtable with lawmakers and victims of revenge porn and AI-generated deepfakes.

“I am here with you today with a common goal — to protect our youth from online harm,” Melania Trump said on March 3. “The widespread presence of abusive behavior in the digital domain affects the daily lives of our children, families and communities.”

Andy LoCascio, the co-founder and architect of Eternos.Life (credited with building the first digital twin), said that while the Take It Down Act is a “no-brainer,” it is completely unrealistic to assume it will be effective. He noted that much of the AI deepfake industry is served from locations not subject to U.S. law, and that the legislation would likely affect only a tiny fraction of offending websites.

National security expert Paul Scharre views a manipulated video by BuzzFeed with filmmaker Jordan Peele (R on screen) using readily available software and applications to change what is said by former president Barack Obama (L on screen), illustrating how deepfake technology can deceive viewers, in his Washington, D.C. offices, January 25, 2019. (ROB LEVER/AFP via Getty Images)

He also noted that text-to-speech cloning technology can now create “perfect fakes.” While most major providers have significant controls in place to prevent the creation of fakes, LoCascio told Fox News Digital that some commercial providers are easily fooled.

Furthermore, LoCascio said anyone with access to a reasonably powerful graphics processing unit (GPU) could build their own voice models capable of supporting “clones.” Some available services require less than 60 seconds of audio to produce one. The resulting clip can then be edited with basic software to make it even more convincing.

“The paradigm regarding the realism of audio and video has shifted. Now, everyone must assume that what they are seeing and hearing is fake until proven to be authentic,” he told Fox News Digital.

While there is little criminal guidance regarding AI deepfakes, attorney Danny Karon says alleged victims can still pursue civil claims and be awarded money damages.

In his forthcoming book “Your Lovable Lawyer’s Guide to Legal Wellness: Fighting Back Against a World That’s Out to Cheat You,” Karon notes that AI deepfakes fall under traditional defamation law, specifically libel, which involves spreading a false statement in a fixed form (writing, pictures, audio, and video).

This illustration photo taken on January 30, 2023, shows a phone screen displaying a statement from the head of security policy at META with a fake video (R) of Ukrainian President Volodymyr Zelensky calling on his soldiers to lay down their weapons shown in the background, in Washington, D.C. (Olivier Douliery/AFP via Getty Images)

To prove defamation, a plaintiff must provide evidence and arguments on specific elements that meet the legal definition of defamation according to state law. Many states have similar standards for proving defamation.

For example, under Virginia law, as was the case in the Depp v. Heard trial, actor Johnny Depp’s team had to satisfy the following elements that constitute defamation:

- The defendant made or published the statement

- The statement was about the plaintiff

- The statement had a defamatory implication for the plaintiff

- The defamatory implication was designed and intended by the defendant

- Due to circumstances surrounding publication, the statement conveyed a defamatory implication to someone who saw it

“You can’t conclude that something is defamation until you know what the law and defamation is. Amber Heard, for instance, didn’t, which is why she didn’t think she was doing anything wrong. Turns out she was. She stepped in crap and she paid all this money. This is the analysis people need to go through to avoid getting into trouble as it concerns deepfakes or saying stuff online,” Karon said.

Karon told Fox News Digital that AI deepfake claims can also be channeled through invasion of privacy law, trespass law, civil stalking, and the right of publicity.

The hyper-realistic image of Bruce Willis is actually a deepfake created by a Russian company using artificial neural networks. (Deepcake via Reuters)

“If Tom Hanks had his voice co-opted recently to promote a dental plan, that is an example of a company exploiting someone’s name, image, and likeness, and in that case voice, to sell a product, to promote or to derive publicity from somebody else. You can’t do that,” he said.

Unfortunately, issues can arise if a plaintiff is unable to determine who created the deepfake or if the perpetrator is located in another country. In this context, someone looking to pursue a defamation case may have to hire a web expert to find the source of the content.

If the individual or entity is international, this becomes a venue issue. Even if a person is found, a plaintiff must determine the answer to these questions:

- Can the individual be served?

- Will the foreign nation help to facilitate this?

- Will the defendant show up to the trial?

- Does the plaintiff have a reasonable likelihood of collecting money?

If the answer to some of these questions is no, investing the time and finances required to pursue this claim may not be worth it.

“Our rights are only as effective as our ability to enforce them, like a patent. People say, ‘I have a patent, so I’m protected.’ No, you’re not. A patent is only as worthwhile as you’re able to enforce it. And if you have some huge company who knocks you off, you’re never going to win against them,” Karon said.

Fox News’ Brooke Singman and Emma Colton contributed to this report.